Advanced speech-to-text for institutions, optimized for research terminology and noisy field audio

Domain-trained models tuned for research terminology and noisy environments

Privacy and data residency safeguards designed for institutional requirements

Edit results and use Slack/Google Chat integrations for team workflows

Accurate transcription in any language, including mixed-language dialogues

Uploading interviews through to exporting final transcripts, every step is designed to make research transcription fast and accurate

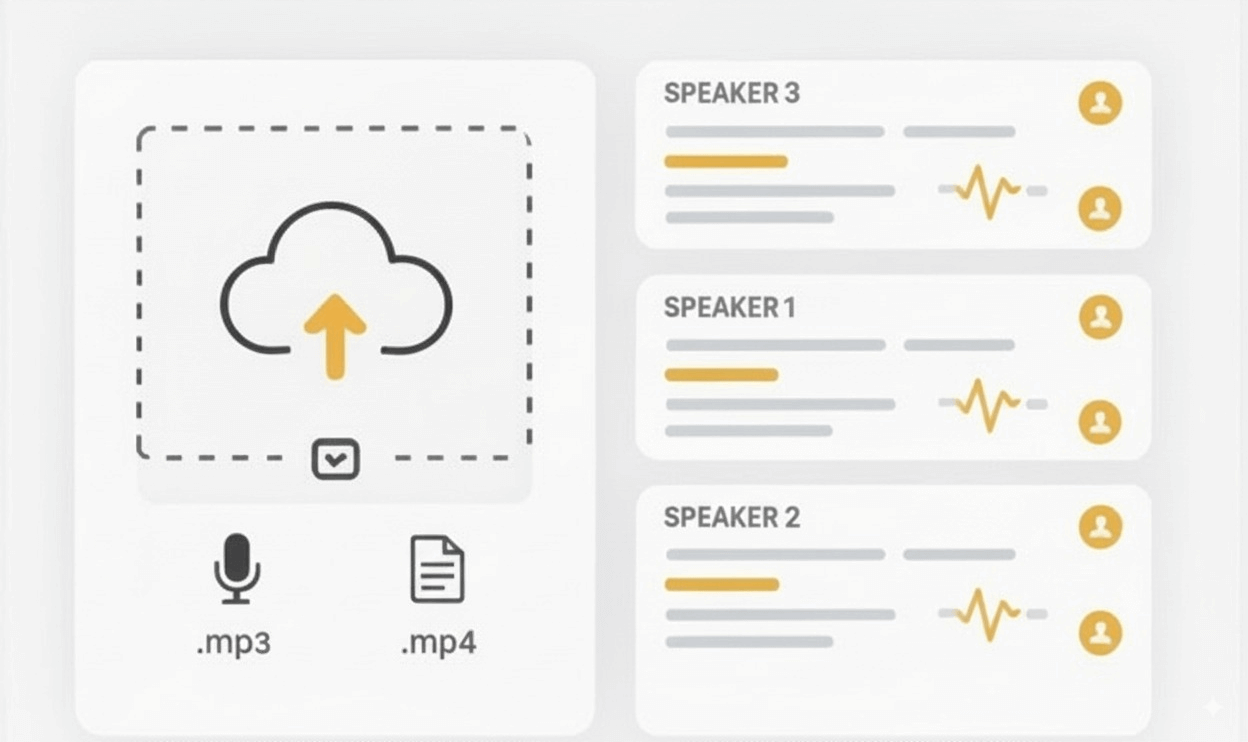

Upload or record interviews. You can drag and drop files from your computer or record directly using your microphone. The service supports many file formats, so you don't need to convert recordings before uploading.

Speech is converted to text within minutes using models trained for research topics, ensuring accurate recognition of complex scientific terms. The system performs reliably even with background noise and in mixed-language conversations common in international research.

Review transcription results in a clean, intuitive editor. Work together with your team by sharing the transcript and connecting it to tools like Slack or Google Chat.

Final transcripts can be exported in different formats, or sent directly to research and analysis software to streamline workflows and save time.

From interviews to classroom recordings, SpeechText.AI helps researchers save time, improve accuracy, and make their data easier to analyze

A short, researcher-focused guide to recording, transcribing, and preparing interviews for analysis, complete with a checklist, consent template, and citation examples.

Prepare recordings for accurate research transcription. Use a short consent script, choose a lossless format (WAV/M4A), and test microphone levels before starting.

Follow simple recording rules to improve automatic transcription accuracy: keep speakers close to the mic, ask one person to speak at a time, and state participant names before speaking for easier diarization.

Upload your recording to SpeechText.AI to generate a fast, editable transcript. Use the collaborative editor to clean speaker labels and export time-coded transcripts for NVivo, Atlas.ti or subtitle workflows.

Suggested script:

"This interview will be recorded for research purposes. Recordings will be transcribed using an AI transcription service (EU-hosted and GDPR-compliant). Transcripts may be reviewed and edited by the research team; recordings and final transcripts will be stored securely. Your participation is voluntary and you may stop the recording at any time. Do you consent to the recording, automated transcription, and use of the transcript for research?"

Download editable templates: Consent Template (.docx)

Participant Lastname, Initials. (Year, Month Day). Interview topic [Unpublished interview transcript].

Lastname, Firstname. Interview. Date of interview. Transcript.

Interviewee First Last, interview by Interviewer First Last, Month Day, Year, transcript.

Tip: include an internal transcript ID, interview date and storage location in your archive metadata for reproducibility.

Download: NVivo import cheat-sheet (PDF)

SpeechText.AI is EU-hosted by default: all production servers are located in Europe, and the service is designed to meet GDPR requirements for data residency and privacy. We apply multiple layers of technical protection across the service: HTTPS is enforced for all traffic, uploads are encrypted at the server side, and network-level protections such as firewalls and IP filtering help block untrusted traffic. You retain full ownership of anything you upload to SpeechText.AI, and you can export or permanently delete uploaded files and transcription results from your dashboard; deletion is irreversible.

Accuracy depends on factors like audio quality, speaker clarity, overlapping speech and complex terminology, but on typical research audio our baseline accuracy exceeds 95%. This baseline reflects automated transcription using domain-trained models tuned for research speech.

Most interviews and lectures are transcribed within minutes depending on length and audio quality. Longer or poor-quality files take proportionally longer.

Plan the recording and capture clean audio: get informed consent on the record, choose a quiet space and a good microphone, and keep a backup of the raw file. Upload the recording to SpeechText.AI (or your chosen service), select an interview/focus-group or domain-trained model and enable speaker diarization so the AI can separate speakers and produce time-coded output.

Edit and verify inside the transcript editor using confidence scores and short audio snippets to fix domain terms, then export time-coded transcripts to DOCX/TXT/CSV or formats for NVivo/Atlas.ti. Apply redaction or anonymization where needed, keep an archived metadata record (IDs, date, consent), and optionally request human review for publication-grade accuracy.

Be clear about your research question and design an interview guide with focused prompts so every recording collects the data you need. Get informed consent on the record, decide on data residency and storage rules up front, and test your recording setup (microphone placement, levels, and a quiet environment). Also run a short pilot to surface any practical issues before you begin full data collection.

During the interview, build rapport and give simple instructions so participants speak clearly and one at a time. Announce participant names or IDs at the start of each turn to help later speaker identification. Monitor recording quality as you go and keep a secondary backup when possible so you never lose original audio if a file is corrupted.

After the session, immediately back up the raw file and upload it to your transcription workflow, choosing interview-optimized or domain-trained settings and enabling speaker diarization.

Reduce bias before you record by designing neutral, open-ended prompts and by piloting your interview guide. Write standard instructions and question wording so every participant receives the same prompts, and train interviewers on neutral probing. Decide upfront on sampling and recruitment strategies that minimise selection bias, and document these decisions in the study protocol.

During the interview, focus on rapport and clear instructions that encourage honest answers. Remind participants that there are no right or wrong responses and consider anonymizing identifiers to lower social-desirability effects. Keep speakers separate when possible (one voice at a time) and monitor audio quality so transcription doesn't introduce bias from inaudible passages.

After transcription, reduce analytic bias with transparent, reproducible procedures: use verbatim transcripts, record reflexive field notes, apply triangulation by combining interview data with documents, observations or surveys, use member-checking to validate interpretations with participants when appropriate, and keep an audit trail of coding decisions and changes.

Finally, use technical safeguards that support unbiased outputs: choose transcription settings optimized for interview audio and domain vocabulary, review low-confidence segments rather than trusting raw AI output. Report your methods clearly (how interviews were conducted, how transcripts were produced and checked) so readers can assess potential bias and the steps you took to minimise it.

To transcribe oral histories in education, begin with a high-quality recording and clear consent from participants about how their stories will be used and stored. Use a quiet environment, good microphones, and back up the raw files before uploading them to a transcription service. Selecting a model trained for interviews or academic speech ensures better handling of narrative style, pauses, and multiple speakers, which are common in oral histories.

Once transcribed, edit the text for accuracy by correcting transcription mistakes and labeling speakers. Depending on your project goals, you may choose verbatim transcription (capturing every pause, filler word, and emotion) or a cleaned transcript for easier reading. Apply anonymization or redaction if sensitive details are shared, then export transcripts to DOCX, TXT, or formats compatible with qualitative analysis software.

Start by ensuring high-quality recordings: use a quiet room, test levels, and place multiple mics so overlapping speech is captured clearly. Decide on your transcription style: verbatim (including pauses, fillers, overlaps) to preserve interactional nuance, or "cleaned" for readability, and apply it consistently. Label speakers from the start (e.g., Moderator, P1, P2), insert regular timestamps (e.g., every 30-60 seconds or before key segments), and note salient nonverbal cues when they inform meaning (laughter, long pause, emphasis). When talk overlaps, mark it clearly (e.g., bracketed notes) so you don't lose group dynamics.